Bert Griggs - A Deep Look At Language Models

When we hear the name "Bert Griggs", we're actually stepping into a fascinating conversation about how computers are learning to make sense of our words, and that is a big deal. It's about a pivotal shift in how machines interact with human language, moving from simple recognition to a much deeper grasp of meaning. This shift has changed how we build tools that can read, write, and even talk back to us in ways that feel more natural, more like a real conversation. This particular "Bert Griggs" isn't a person but an idea: a framework that has reshaped how we teach machines to understand language. It helps computers look at words not one by one but in the full sweep of their surroundings, working out the intent behind our sentences. In some respects, it's like giving a machine a much better pair of ears and a more insightful brain for language.

What makes this "Bert Griggs" so special is its ability to consider the entire picture: the words before and after every word. This helps it grasp the nuances of human expression, making it a cornerstone of many modern applications that deal with text, and it has made a real mark on how computers process and interpret our communication. The idea came from researchers at Google in 2018, and it's been shaking things up ever since.

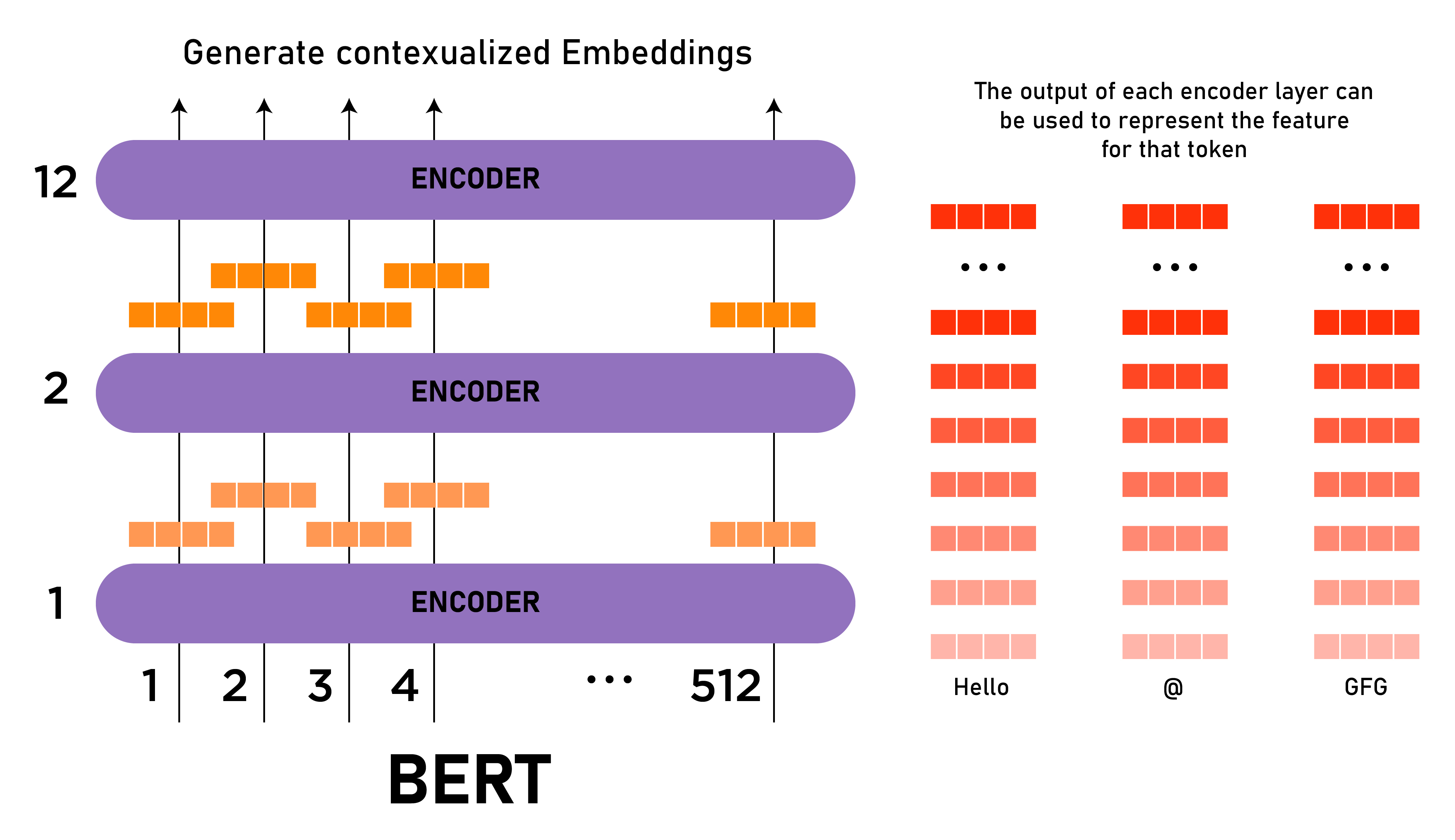

This "Bert Griggs" system is actually called BERT, short for Bidirectional Encoder Representations from Transformers. It's a way for computers to learn about language by looking at text in a very complete manner. Unlike older approaches that read words strictly from left to right, BERT takes in the whole sequence at once, so every word is interpreted in light of everything around it. It's a genuinely different way for machines to learn our language patterns and meanings, and it has changed how many language-based tasks are handled today.
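To make the contrast concrete, here is a minimal plain-Python sketch of which words each position is allowed to "see". A left-to-right model uses a causal mask (a word sees itself and earlier words only), while BERT's encoder lets every word see the whole sequence. The function `visibility_mask` and the example sentence are our own illustration, not part of any library; in real BERT the attention mask typically only hides padding.

```python
def visibility_mask(n, bidirectional):
    """Return an n x n grid where mask[i][j] is True if position i may attend to j."""
    return [[bidirectional or j <= i for j in range(n)] for i in range(n)]

tokens = ["she", "sat", "on", "the", "bank", "of", "the", "river"]
left_to_right = visibility_mask(len(tokens), bidirectional=False)
both_ways = visibility_mask(len(tokens), bidirectional=True)

i = tokens.index("bank")
# A left-to-right model reading "bank" has not yet seen "river".
print([t for t, ok in zip(tokens, left_to_right[i]) if ok])
# → ['she', 'sat', 'on', 'the', 'bank']
# BERT's encoder sees the whole sentence, including "river".
print([t for t, ok in zip(tokens, both_ways[i]) if ok])
```

The disambiguating word "river" comes after "bank", which is exactly the case where only the bidirectional view has the clue it needs.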

Table of Contents

- What's the Big Deal with Bert Griggs?

- How Does Bert Griggs Process Language?

- Why is Bidirectional Important for Bert Griggs?

- How Does Bert Griggs Compare to Older Approaches?

- What About Smaller Versions of Bert Griggs?

- Where Can You See Bert Griggs in Action?

- Bert Griggs and Its Many Forms

- The Continuing Story of Bert Griggs

What's the Big Deal with Bert Griggs?

So, what makes this "Bert Griggs" so significant? It's a deep learning language model designed to be pre-trained once on large amounts of unlabeled text and then fine-tuned for specific language tasks. It's famous for its ability to consider the full context of a word: it doesn't just look at a word by itself but at the words that come before and after it. This is particularly useful for grasping the intent behind a sentence, which can be quite complex for a computer. It was introduced by researchers at Google in 2018 and is built on the transformer architecture, which was a real step forward for language models. It pushed past what earlier models could do, offering a much more complete way to understand text. Concretely, the model breaks text into a sequence of tokens (words and word pieces) and represents each token as a vector that depends on its context, which lets it interpret language with a level of insight that was previously hard to achieve.
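BERT splits text into tokens with a WordPiece vocabulary: words it knows stay whole, and rarer words are broken into smaller pieces, with continuation pieces marked by `##`. The sketch below shows the greedy longest-match-first splitting of a single word. It assumes the word has already been lowercased and separated from punctuation, and the tiny vocabulary is invented for illustration (real BERT vocabularies hold about 30,000 entries). `wordpiece` is a hypothetical helper, not a library function.

```python
def wordpiece(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first split of one word into WordPiece units.

    Pieces after the first carry the '##' prefix, following BERT's convention.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, shrinking until a vocab hit.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # no valid split: the whole word becomes unknown
        pieces.append(match)
        start = end
    return pieces

# Toy vocabulary, invented for this example.
vocab = {"un", "##aff", "##able", "play", "##ing", "bank"}
print(wordpiece("unaffable", vocab))  # ['un', '##aff', '##able']
print(wordpiece("playing", vocab))    # ['play', '##ing']
print(wordpiece("xyz", vocab))        # ['[UNK]']
```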

How Does Bert Griggs Process Language?

When you give "Bert Griggs" some text, it processes it in a distinctive way. The input can hold two distinct parts, usually called segment A and segment B. The sequence starts with a special [CLS] token, a [SEP] token separates the two segments, and another [SEP] token marks the very end; each token also carries a segment id saying which part it belongs to. This setup helps the model understand the relationship between different parts of a sentence, or between two separate sentences. During pre-training the model learns two tasks. It predicts masked tokens, meaning it guesses a hidden word from its surroundings, and it predicts whether segment B actually follows segment A, which is useful for tasks such as question answering and natural language inference. This core idea of looking at everything around a word is what gives "Bert Griggs" its ability to make sense of human communication, letting it pick up on subtle cues that other methods might miss.
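A minimal sketch of how a sentence pair is packed into this format, assuming the text is already split into a list of string tokens (real BERT would use WordPiece tokens and then map them to integer ids). `pack_pair` is a hypothetical helper written for illustration.

```python
def pack_pair(tokens_a, tokens_b):
    """Build BERT-style input: [CLS] A [SEP] B [SEP], plus segment ids.

    Segment id 0 covers [CLS], segment A and the first [SEP];
    segment id 1 covers segment B and the final [SEP].
    """
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids

tokens, segments = pack_pair(["the", "man", "went", "to", "the", "store"],
                             ["he", "bought", "milk"])
for tok, seg in zip(tokens, segments):
    print(seg, tok)
```

For next sentence prediction, the pair would also carry a label saying whether segment B really followed segment A in the original text.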

Why is Bidirectional Important for Bert Griggs?

The name "Bert Griggs" actually carries a hint about one of its most important features: bidirectionality. The "B" in its real name, BERT, stands for "bidirectional", meaning that each word's representation is built from the words on its left and on its right at the same time. This is a big difference from older language models that process text in only one direction. For instance, the word "bank" means something different in "the bank of the river" than in "the bank approved the loan". A bidirectional model can see both sides of the word, so it can pick the correct meaning from all the surrounding words, even when the decisive clue comes after the word itself. This is what makes "Bert Griggs" so good at tasks that need a deep level of language comprehension: it can consider all the clues available in a sentence.
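Looking at both sides at once has a catch: the model cannot simply be trained to predict the next word, because with full bidirectional attention each word could indirectly see itself. BERT's masked-language-model objective gets around this by hiding some tokens and asking the model to recover them. The sketch below follows the corruption rule described in the BERT paper: roughly 15% of positions are chosen, and each chosen token is replaced by [MASK] 80% of the time, by a random token 10% of the time, and left unchanged 10% of the time. `mask_tokens` and the toy vocabulary are hypothetical, and the position selection is simplified compared with the reference code.

```python
import random

SPECIAL = {"[CLS]", "[SEP]"}

def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Corrupt a token list for masked-LM training.

    Returns the corrupted tokens and a dict {position: original token}
    holding the prediction targets.
    """
    # Never mask the special markers.
    candidates = [i for i, t in enumerate(tokens) if t not in SPECIAL]
    rng.shuffle(candidates)
    # Pick roughly mask_prob of the candidate positions (at least one).
    n_pred = max(1, round(len(candidates) * mask_prob))
    corrupted = list(tokens)
    labels = {}
    for i in sorted(candidates[:n_pred]):
        labels[i] = tokens[i]
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = "[MASK]"           # 80%: hide the token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: swap in a random token
        # remaining 10%: keep the original token, but it is still a target
    return corrupted, labels

tokens = ["[CLS]", "she", "sat", "on", "the", "bank", "of", "the", "river", "[SEP]"]
toy_vocab = ["river", "money", "bank", "the", "sat", "loan"]
corrupted, labels = mask_tokens(tokens, toy_vocab, random.Random(0))
print(corrupted)
print(labels)
```

The model is then trained to predict `labels[i]` at each selected position from the corrupted sequence, using context on both sides.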

How Does Bert Griggs Compare to Older Approaches?

When we look at how "Bert Griggs" stacks up against earlier methods, it stands out in several key ways. Some traditional methods use static word representations (word embeddings such as word2vec or GloVe), where a word always gets the same vector regardless of its surroundings. "Bert Griggs" instead adjusts how it represents each word based on its specific context, so the same word can have different numerical representations depending on the sentence it appears in. That captures the nuances of human language far more accurately, and it is a big reason why "Bert Griggs" has surpassed older approaches on a wide range of language tasks. This adaptability is a core part of its appeal.
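The difference between static and contextual representations can be sketched in a few lines of plain Python. A static lookup returns the same vector for "bank" in every sentence, while one round of scaled dot-product self-attention (the core operation of the transformer) mixes in the neighboring words, so "bank" ends up with a different vector next to "river" than next to "money". The 2-d vectors are invented, and the learned query/key/value projections, multiple heads and stacked layers of real BERT are left out to keep the sketch short.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """One head of scaled dot-product self-attention, using the inputs
    directly as queries, keys and values (no learned projections)."""
    d = len(vectors[0])
    out = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # Weighted average of all vectors in the sentence.
        out.append([sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)])
    return out

# Invented 2-d static embeddings: one fixed vector per word.
static = {"the": [0.1, 0.1], "river": [1.0, 0.0], "money": [0.0, 1.0], "bank": [0.5, 0.5]}

def contextual(sentence, word):
    vectors = self_attention([static[w] for w in sentence])
    return vectors[sentence.index(word)]

river_bank = contextual(["the", "river", "bank"], "bank")
money_bank = contextual(["the", "money", "bank"], "bank")
print("static:", static["bank"])  # the same in every sentence
print("river: ", river_bank)      # leans toward the "river" direction
print("money: ", money_bank)      # leans toward the "money" direction
```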

What About Smaller Versions of Bert Griggs?

Sometimes a full-sized "Bert Griggs" model is too big or too slow for a given situation. For those cases there are smaller versions, such as DistilBERT, a compact model trained to mimic the original. To train or fine-tune it, you can follow the same steps as with the larger model and simply swap in DistilBERT. It has fewer layers and parameters, so it runs faster and needs fewer resources; its authors report that it keeps about 97% of BERT's language-understanding performance while being 40% smaller and 60% faster. For comparison, the original BERT comes in two sizes: BERT-Large has 24 transformer layers, 1024 hidden units and 16 attention heads, about 340 million parameters, while BERT-Base has 12 layers, 768 hidden units and 12 attention heads, about 110 million parameters. BERT-Base offers a good balance for most text classification jobs, but a smaller version is very useful when speed or size matter. It's like a compact car that still gets you where you need to go, just faster and on less fuel.
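These parameter counts can be checked with a little arithmetic. The sketch below counts the weights of a BERT-style encoder piece by piece, assuming the English uncased vocabulary of 30,522 WordPiece tokens and 512 positions, and leaving out the pre-training output heads. `bert_param_count` is our own helper, not a library function.

```python
def bert_param_count(hidden, layers, ffn, vocab=30522, max_pos=512,
                     type_vocab=2, pooler=True):
    """Count parameters of a BERT-style encoder (no pre-training heads)."""
    # Token, position and segment embeddings, plus one LayerNorm (gain + bias).
    emb = (vocab + max_pos + type_vocab) * hidden + 2 * hidden
    # Q, K, V and output projections: each a hidden x hidden matrix plus a bias.
    attn = 4 * (hidden * hidden + hidden)
    # Feed-forward block: hidden -> ffn -> hidden, each with a bias.
    ff = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    # Two LayerNorms per layer (after attention and after feed-forward).
    norms = 2 * (2 * hidden)
    total = emb + layers * (attn + ff + norms)
    if pooler:
        # Dense layer applied to the [CLS] vector.
        total += hidden * hidden + hidden
    return total

base = bert_param_count(hidden=768, layers=12, ffn=3072)
large = bert_param_count(hidden=1024, layers=24, ffn=4096)
# DistilBERT: 6 layers, no segment embeddings, no pooler.
distil = bert_param_count(hidden=768, layers=6, ffn=3072, type_vocab=0, pooler=False)
print(f"base ~{base / 1e6:.0f}M, large ~{large / 1e6:.0f}M, distil ~{distil / 1e6:.0f}M")
# → base ~109M, large ~335M, distil ~66M
```

The totals come out close to the commonly quoted figures of about 110 million (BERT-Base), 340 million (BERT-Large) and 66 million (DistilBERT), and show that the transformer layers, not the embeddings, account for most of the difference.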

Where Can You See Bert Griggs in Action?

You might not realize it, but "Bert Griggs" and models like it work behind the scenes in many places you already use. Think about how search engines understand complex questions, or how customer service chatbots seem to grasp what you're asking. These systems often rely on the kind of deep language understanding that "Bert Griggs" provides. Text classification is a good example: when sorting customer reviews into positive and negative, "Bert Griggs" does well because it picks up the sentiment carried by the words in context. It's the basis for an entire family of models that are constantly being refined and put to use, and this technology is changing how we interact with digital information, making it more intuitive and effective.
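For a classification task like this, a common setup is to feed the final vector of the [CLS] token into a small linear layer followed by a softmax. The sketch below shows only that head, in plain Python, with made-up numbers standing in for the encoder output and for weights that would normally be learned during fine-tuning; `classify` is a hypothetical helper.

```python
import math

def classify(cls_vector, weights, biases, labels):
    """Linear layer + softmax over the [CLS] representation."""
    logits = [sum(w * x for w, x in zip(row, cls_vector)) + b
              for row, b in zip(weights, biases)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(labels)), key=lambda k: probs[k])
    return labels[best], probs

# Hypothetical 4-d [CLS] vector (BERT-Base would produce 768 numbers).
cls_vec = [0.2, -1.3, 0.7, 0.05]
W = [[0.5, -0.8, 0.3, 0.0],   # weights for "positive"
     [-0.4, 0.9, -0.2, 0.1]]  # weights for "negative"
b = [0.0, 0.0]
label, probs = classify(cls_vec, W, b, ["positive", "negative"])
print(label, [round(p, 3) for p in probs])
```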

Bert Griggs and Its Many Forms

"Bert Griggs" isn't just one single thing; it's a concept that has led to many different versions and adaptations. For different kinds of tasks, the recipe stays largely the same: you add a small task-specific output layer and fine-tune on labeled examples, adjusting settings such as the learning rate as needed. Smaller models can also be distilled from it by mapping their layers onto its layers. In TinyBERT's uniform strategy, for example, a 4-layer student learning from a 12-layer "Bert Griggs" model maps each of its layers to every third layer of the teacher. This shows how flexible the core ideas behind "Bert Griggs" are: it's a foundational piece of technology that can be adapted to many language-related challenges.
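The uniform mapping can be written as g(m) = m × N / M for a student with M layers and a teacher with N layers, with layer 0 standing for the embedding layer. In TinyBERT, each student layer is then trained to match the hidden states and attention patterns of teacher layer g(m). The small sketch below only computes the mapping; `uniform_layer_map` is a hypothetical name.

```python
def uniform_layer_map(student_layers, teacher_layers):
    """Uniform strategy: student layer m learns from teacher layer m * (N / M).

    Layers are numbered from 1; layer 0 (the embedding layer) maps to 0.
    """
    if teacher_layers % student_layers:
        raise ValueError("teacher depth must be a multiple of student depth")
    step = teacher_layers // student_layers
    return {m: m * step for m in range(student_layers + 1)}

print(uniform_layer_map(4, 12))  # {0: 0, 1: 3, 2: 6, 3: 9, 4: 12}
```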

The Continuing Story of Bert Griggs

The impact of "Bert Griggs" continues to grow; it has become a groundbreaking model in natural language processing and set a new standard for how computers handle language tasks. When it was released, it achieved state-of-the-art results on eleven language tasks, including the GLUE benchmark and SQuAD question answering, showing how powerful pre-trained language models can be. It's a testament to the idea that teaching machines to understand the full context of words is a very effective way to make them smarter about human communication. The story of "Bert Griggs" is far from over, as researchers and developers keep finding new ways to use and improve on its fundamental principles, and new applications keep appearing.

This exploration of "Bert Griggs" has covered its core identity as a powerful language model, its bidirectional approach to understanding the full context of words, how it processes input, and its advantages over older methods. We also touched on smaller versions like DistilBERT, its different forms and applications, and the broad impact it has had on natural language processing.
